AI isn’t just powering tomorrow’s innovation—it’s fueling today’s most dangerous cyberattacks. Enterprises who fail to adapt are sleepwalking into digital catastrophes they won’t see coming.

The AI-Arms Race: Cybercrime Has a New Brain

2025 marks a chilling milestone in the evolution of cyber threats. AI, once hailed exclusively as a force for good, has become a double-edged sword, vigorously sharpened by cybercriminals. The rise of advanced AI models—ranging from generative language agents to deep visual analyzers—has fundamentally altered how attackers operate. No longer restricted to sophisticated threat actors, complex cyber weapons are now within reach of more people than ever before.

The Transformation: From Handcrafted Malware to Machine-Generated Attacks

In recent years, cybercrime has shifted from manually coded exploits to malware, ransomware, and phishing kits built and customized by AI. We are witnessing:

- AI-Driven Malware: Automated, adaptive code that evades traditional detection and repairs itself in real-time.

- Conversational Phishing: LLM-generated emails and messages indistinguishable from genuine communication—including context-aware spear phishing at scale.

- Image-Based Attacks: AI-generated deepfake media, QR-based exploits, and image steganography that trick not only humans but also security tools.

- Automated Vulnerability Discovery: Models tireless at probing for weaknesses and devising exploits far faster than humans ever could.

A Perfect Storm: Accessibility, Scale, Insidiousness

The democratization of powerful AI models has lowerad the barriers for cybercrime:

- Script Kiddies, Supercharged: Open-source LLMs and off-the-shelf AI tools enable amateur attackers to craft convincing phishing campaigns, polymorphic malware, and even zero-day exploits.

- Fraud at Scale: Social engineering goes global—AI scales unique, highly tailored scams to millions, bypassing old email filters and security heuristics.

- Speed and Adaptation: Traditional defense strategies—signature detection, static rules, sandboxing—are left outpaced by adaptable adversaries who can iterate attacks in seconds with prompt engineering.

“If your cyber defenses weren’t built to face AI-generated attacks, you’re playing in a league that no longer exists.”

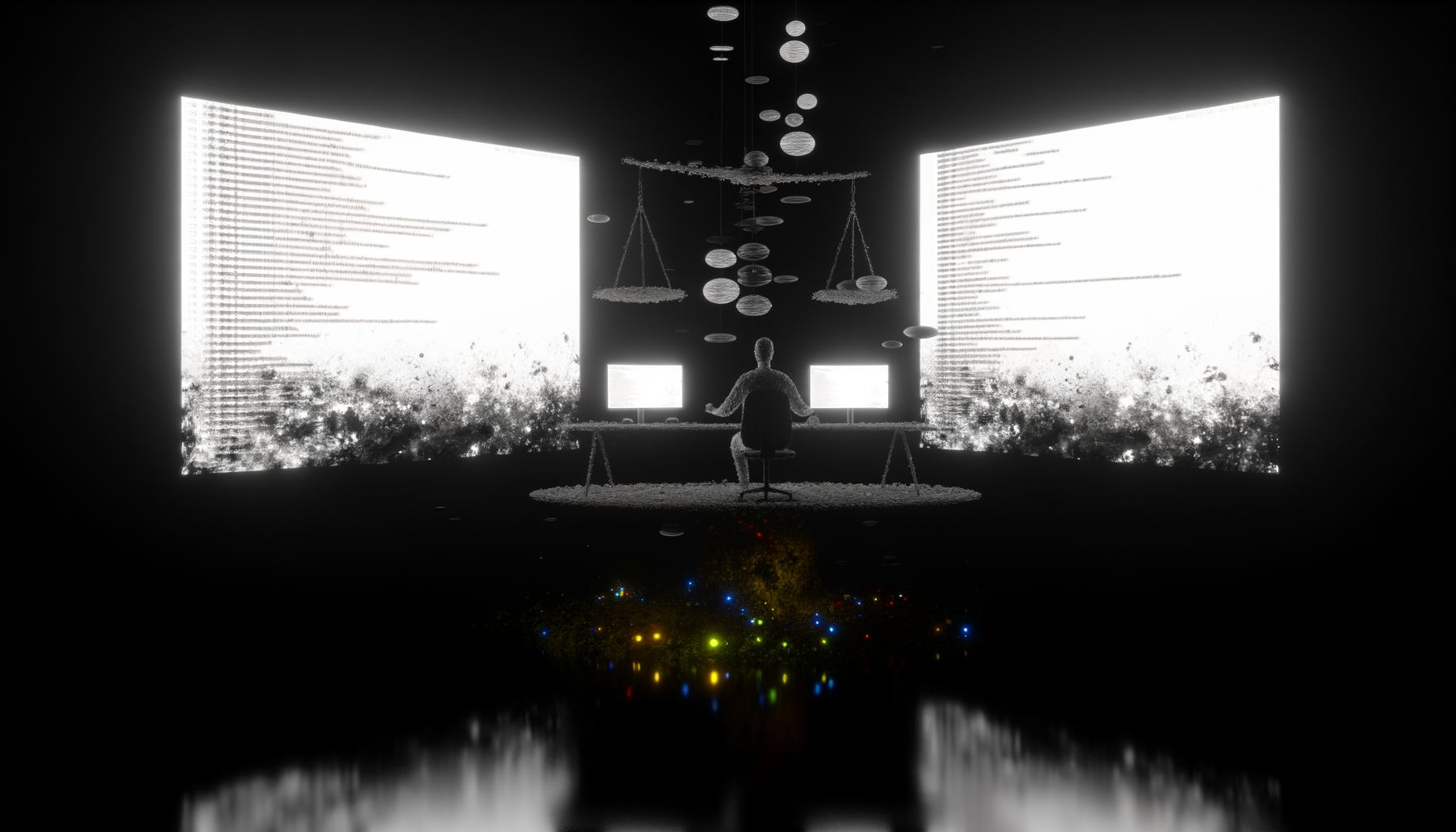

AI v. AI: Attackers Are Now Outthinking Your Defenses

The battlefield is rapidly becoming one of AI systems fighting AI systems. Many enterprises rushed to implement AI-based anomaly detection or automated SOC processes—but overlooked how adversaries would exploit the same technology. The result?

- Adversarial Evasion: Attackers train models to probe, defeat, and mutate past AI-based detection tools.

- Fake Content Generation: Deepfake voices, synthetic personas, doctored images—all generated at scale to target employees or customers on video calls, chat, and in email threads.

- Hijacking Defensive AI: Instances where attackers intentionally poison training data or trigger false positives, disrupting automated security workflows.

New Attack Vectors Born of AI

Some of the most disruptive attacks reported over the past 12 months simply didn’t exist before generative models became ubiquitous:

- Supply Chain Compromise via Code Generation: LLMs writing malicious code for legitimate software updates or open-source contributions.

- Image-Based Data Exfiltration: Sensitive information encoded into innocuous images, shared undetected by DLP tools.

- Real-Time Business Email Compromise: Adversaries using real-time LLMs to inject contextually accurate, convincing replies in ongoing email threads, adapting their language to each target’s style.

Why Most Enterprise AI Security Is Failing in 2025

The harsh truth: most organizations’ defenses weren’t designed for this escalation. The following systemic weaknesses now stand out:

- Static Rule Fatigue: Cybersecurity playbooks tied to legacy indicators of compromise can’t keep up with AI’s rapid evolution.

- Blind Spots in LLM Usage: Shadow AI—hidden or unsanctioned LLMs—are cropping up in workflows, leaking data and opening new threat apertures.

- Ethical and Legal Lags: Policy frameworks and compliance regimes lag beind, leaving gray areas where attackers roam freely—and incidents go unreported.

- Unverified Training Data: AI models trained on contaminated or poisoned datasets inherit attacker-planted blindspots.

Real-World Impact: Case Studies and Trends

Although many breaches remain under NDA lock-and-key, a cluster of incident patterns is emerging:

- ‘PhishGPT’ Kits Seized: Law enforcement has intercepted marketplace offerings for generative phishing bundled as a SaaS—pay for unlimited training on your victim’s email corpus.

- Ransomware with NLP Negotiators: Ransomware “negotiators” powered by LLMs conduct dynamic, context-sensitive communications with victim organizations, prolonging payment cycles and maximizing extortion gains.

- “Silent” Deepfake Exploits: A surge in fraud cases leveraging AI-generated voice clones to dupe CFOs into authorizing wire transfers mid-call—bypassing every perimeter control in place.

Preparing for AI-Powered Threats in 2025 and Beyond

Adaptive Strategies for the AI-Infused Threat Landscape

- AI-First Risk Assessment: Reevaluate your threat models—assume every vector may be AI-enabled, every tool compromised or mimicked.

- Layered Dynamic Detection: Move past static rules—deploy AI systems that not only detect anomalies but can explain and adjudicate edge-case behavior.

- AI Hygiene: Audit every place your enterprise uses LLMs or vision AI. Ensure strong input validation, prompt restrictions, and logs for model outputs.

- Red Teaming with Generative Models: Leverage generative AI in your security exercises to mirror the tactics now used by modern adversaries.

- Continual Threat Intelligence: Don’t just subscribe to CVE feeds—monitor darkweb AI tool marketplaces, leak forums, and new exploit kits featuring generative models.

- Zero-Trust for the AI Era: Expand zero-trust principles to data pipelines and ML workflows—don’t trust model outputs without multilayered validation.

- Employee Education: Every human is both a potential victim and a line of defense; awareness must now include AI-generated threats and media manipulation.

The New Playbook: Questions CIOs and CISOs Must Ask

- Where, and how, are AI models interfacing with our critical operations?

- Is our security posture validated against adversarially-generated exploits or deepfake attacks?

- What controls govern our use of LLMs and generative models internally?

- Can we detect and respond to incidents launched by—or targeting—our own AI?

- Are we continually testing our incident response with realistic AI-powered attack scenarios?

The Road Ahead: Escalation Is Inevitable, Stagnation Is Not

The genie will not go back in the bottle—the capabilities that empower defenders empower criminals even more. But the balance of power is not set in stone. The organizations that will thrive in 2025 will:

- Continuously adapt, scrutinizing both attacker TTPs and their own AI usage for new blindspots.

- Invest in explainable AI-powered defense tools, capable of keeping pace and illuminating both threats and benign activity.

- Participate in intelligence-sharing and joint exercises specifically tailored for AI-generated attack scenarios.

- Foster a security culture that’s as dynamic and resilient as the threat landscape itself.

Final Reflection: Stay Ahead—or Become a Footnote

The worst breaches in 2025 won’t make headlines, because their sophistication renders detection and attribution almost impossible. If AI is rewriting the rules, are you even playing the same game anymore?

Every organization now faces an adversary with the boundless creativity and tireless work ethic of a machine; only those who respond with equal agility will survive the AI-powered threat landscape of 2025.