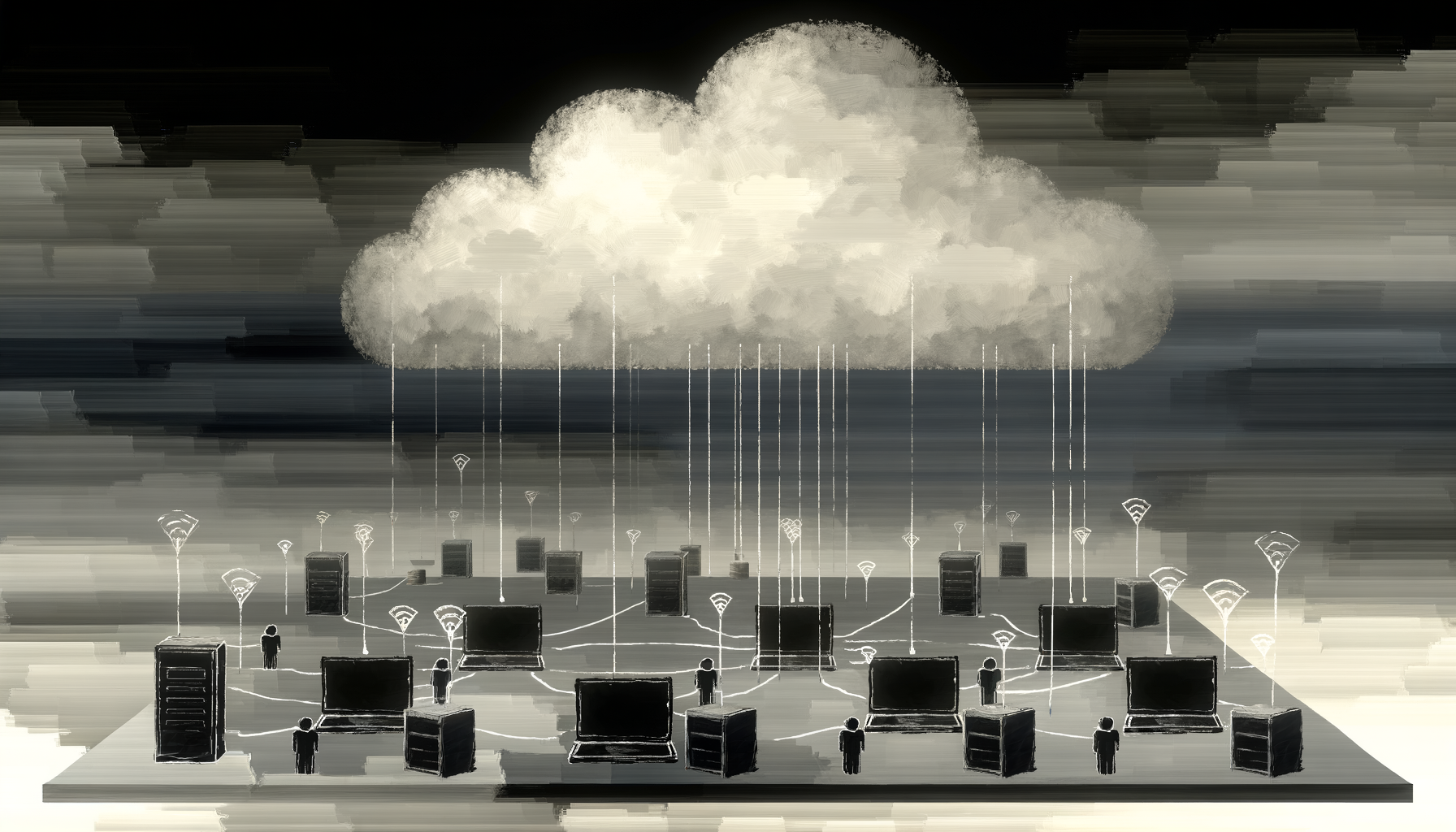

Are cloud AI giants losing their grip? What if the next wave in AI doesn’t live in massive data centers but right at the edge of your own device, out of reach from prying eyes?

Paradigm Shift: Centralized AI’s Dominance Is Under Threat

The prevailing AI infrastructure story has been dominated by the cloud: colossal, proprietary models hosted in hyperscale data centers. These command staggering resources—and even more influence over the downstream users who rely on them. But a tectonic shift is underway.

In 2024, a distinctly different narrative is emerging among enterprises, researchers, and privacy-conscious users. Open-source models—now matching or exceeding the performance of closed counterparts for many tasks—can be fine-tuned, optimized, and deployed beyond the fortress of the cloud. Their new home? Local servers, edge devices, and organization-controlled clusters, far from the grasp of centralized gatekeepers.

The Driving Forces Behind Decentralization

- Data Privacy: Sensitive data seldom leaves local boundaries, minimizing the threat of interception or misuse.

- Autonomy & Control: Organizations customize, update, or audit models without vendor lock-in or opaque updates.

- Latency & Real-Time Performance: Processing happens in milliseconds—vital for industrial, automotive, or medical use-cases—without the round-trip to distant data centers.

- Cost Containment: Avoid unexpected egress or compute charges from cloud providers; scale at your own pace and budget.

- Resilience & Sovereignty: Systems continue operating without connectivity to a central provider, a necessity for mission-critical or remote deployments.

Technical Breakthroughs: Open Source Unleashed at the Edge

This movement isn’t just philosophical—it’s fueled by a relentless stream of engineering breakthroughs. Model distillation, quantization, pruning, and hardware-acceleration libraries now empower even smartphones and microservers to run previously unthinkable inference workloads.

The proprietary cloud AI monopoly is not only challengeable—it’s being directly outflanked where it can’t reach: your edge, your hardware, your data.

Thanks to open-source heavyweights like StableLM, Llama 2, and Mixtral, the blueprint for state-of-the-art local deployment is open, customizable, and rapidly evolving. Open weight models, permissive licensing, and toolchains like ONNX, TensorRT, and GGML have become the go-to for ambitious AI teams wary of being beholden to opaque cloud APIs.

Comparing Centralized vs. Decentralized AI Deployment

| Dimension | Centralized Cloud | Decentralized Edge/Local |

|---|---|---|

| Data Control | Leaves organization | Stays local/end-to-end encrypted |

| Latency | High/unpredictable | Ultra-low, deterministic |

| Customization | Limited/opaque | Full access, self-tuning |

| Upgrades | Provider-driven | User-driven, version lock |

| Sovereignty | Vendor lock-in | Self-owned, portable |

This table drives home that the advantages are not merely academic—they’re transformative for organizations with strict regulatory, real-time, or competitive constraints.

Use Cases Accelerating the Push for On-Prem/Edge AI

It’s not theoretical: multinational banks, automotive OEMs, telecoms, and medical device companies are already pilot-testing or deploying open models on their infrastructure.

- Financial Services: Run LLMs for document processing, anomaly detection, or customer service automation entirely behind the firewall.

- Remote & Industrial IoT: Real-time visual, audio, and sensor inference in factories, vehicles, or field units—where reliance on central connectivity is a dealbreaker.

- Healthcare Providers: On-prem models comply with data residency laws, keeping PHI tightly controlled and enabling fine-tuned medical assistants or diagnostic tools.

- Consumer Electronics: Smart devices powered by locally-optimized AI models enhance privacy and allow continuous operation offline.

Barriers: The Challenges Ahead

No meaningful shift comes without hurdles. Decentralized open-source AI presents both technical and organizational friction:

- Hardware constraints may limit model size or complexity, mandating aggressive optimization.

- The operational responsibility—updating, securing, tuning—shifts to the user organization, requiring new skillsets and processes.

- Interoperability: Edge environments are diverse; seamless deployment across varied platforms demands mature tooling.

Nevertheless, the progress in model optimization techniques and standardized deployment containers (see GGUF, ONNX, Docker for AI) is rapidly closing these gaps.

Why This Is Inevitable: Three Unstoppable Trends

- Regulatory Pressure: Tougher data localization laws render permanent centralization unsustainable for many regulated industries.

- User Demand for Agency: The sophistication and awareness within developer and IT communities ensures transparent, modifiable solutions are in continual demand.

- Breakneck Open-Source Innovation: Unlike proprietary models, the velocity of improvement when thousands of independent teams collaborate will outpace closed development—and empower a flourishing ecosystem of local-first, privacy-driven AI.

Implications for the AI Infrastructure Ecosystem

The big picture? Infrastructure providers—cloud hyperscalers, device OEMs, and edge AI vendors—must rapidly adapt their roadmaps. There is an acute need for:

- Turnkey platforms to deploy, monitor, and secure open-source models at scale across fleets of edge endpoints

- Reference architectures tailored for privacy-centric and regulated sectors

- Continual advances in hardware acceleration and easy-to-integrate model optimization frameworks

Expect to see a surge in offerings: open model “App Stores” for local AI deployments, secure attestation systems for model provenance, and new business models supporting bespoke or industry-specific model fine-tuning inside enterprise perimeters.

The Edge Isn’t Just an Option—It’s Becoming the Default

In the coming years, the most valuable and sensitive AI workloads may never transit the public cloud. Instead, driven by irresistible needs for privacy, efficiency, autonomy, and explainability, the world’s most nimble organizations will bring state-of-the-art AI inside their own walls, or even onto the devices in customers’ hands.

Ask yourself: Would you rather trust your secrets to a remote black box… or build, audit, and operate your own intelligent systems, custom-fit to your risks and mission?

The future is distributed, transparent, and boldly open—wrestling power away from the few, and putting it back where it belongs: with you and your data.

Centralized AI isn’t just fading—it’s being replaced by smarter, localized intelligence where privacy and agency are non-negotiable.